A preview of Apple’s AR glasses: 11 patents decoded.

What would it be like if Apple released a pair of AR glasses?

It has been ten years since Steve Jobs left us, but people still miss the sense of wonder brought by the first-generation iPhone and the classic design of the iPhone 4. When it comes to the Cook era, people mostly talk about the "notch", product details such as the Pro Max’s LiDAR scanner, and Apple’s $3 trillion market value.

What will Cook bring to Apple? This long-standing question seems to have a new answer. In recent media interviews, Cook has called himself AR’s "number one fan" and even said, "We don’t talk about the metaverse, we only talk about AR". AR glasses are therefore likely to become one of Apple’s iconic products of the Cook era.

Apple’s products have always been known for their good looks, high standards and strict requirements. Cook once said that no AR glasses on the market satisfy him, much as he said that no wearable product satisfied him before Apple officially launched the Apple Watch. So what kind of AR glasses does Cook want to make? Can they recapture Apple’s past glory and set off a new consumer craze?

These questions have drawn close attention to Apple’s AR devices, and everyone wants to know what AR glasses made by Apple will look like. Below, we look ahead to Apple’s AR glasses by taking stock of what is known about Apple and its AR devices.

Of course, these AR patents may also be applied to headsets, mobile phones, watches and even cars. After all, Apple is making more and more money, and its consumer business keeps getting broader. Still, as Apple’s first dedicated AR device, the AR glasses are very likely to use these patented technologies.

Which features of Apple’s upcoming AR glasses are worth looking forward to? From the patent documents, we infer that Apple’s AR glasses may offer environmental application models, object-centered scanning, virtual space creation and spatial audio.

▲ Summary of the AR glasses-related patents Apple obtained in 2021.

Among this year’s 11 patents, several of the more interesting ones reveal the "true face" of Apple’s AR glasses.

1. Draw a floor plan by taking a picture: a must-have tool for designers?

In the past, people mainly used a tape measure to work out the area of a room, which was time-consuming and laborious. With Apple’s AR glasses on, they might be able to get the floor plan of a room just by looking around.

This is harder than it sounds. Because there are objects such as tables and sofas indoors, the outline of the room is partly hidden, which makes it difficult to build an accurate floor plan from video and sensor data.

To address these problems, Apple chose to measure the structure of the room and create the floor plan using 3D point cloud technology and 3D display technology. These technologies label the boundaries, walls, doors and windows of the room with text or symbols, and use dedicated measurement methods.

▲ Measuring the structure of the room with the camera.

In this way, users can preview the floor plan of the room in real time while wearing AR glasses.
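
To make the idea concrete, here is a minimal Python sketch of how a room outline could be estimated from a 3D point cloud. It is an illustration only, not the method described in Apple’s patent: it assumes a rectangular room aligned with the coordinate axes and a y-up coordinate system in metres, and the names `room_bounds` and `floor_area` are hypothetical. Because the walls are the outermost points, furniture inside the room does not change the result.

```python
from typing import Iterable, Tuple

Point3D = Tuple[float, float, float]  # (x, y, z) in metres; y is "up" (assumption)
Bounds = Tuple[float, float, float, float]  # (min_x, min_z, max_x, max_z)


def room_bounds(points: Iterable[Point3D]) -> Bounds:
    """Project every point onto the floor plane and return the outer rectangle."""
    footprint = [(x, z) for x, _y, z in points]  # drop the height component
    if not footprint:
        raise ValueError("point cloud is empty")
    xs = [p[0] for p in footprint]
    zs = [p[1] for p in footprint]
    return min(xs), min(zs), max(xs), max(zs)


def floor_area(bounds: Bounds) -> float:
    """Area of the rectangular outline in square metres."""
    min_x, min_z, max_x, max_z = bounds
    return (max_x - min_x) * (max_z - min_z)


# Hypothetical scan: four wall points plus one point on a sofa inside the room.
scan = [
    (0.0, 0.0, 0.0),
    (4.0, 0.0, 0.0),
    (4.0, 2.5, 3.0),
    (0.0, 1.0, 3.0),
    (2.0, 0.5, 1.5),  # sofa: lies inside the outline, so it is ignored
]
bounds = room_bounds(scan)
print(bounds, floor_area(bounds))
```

A real system would also have to handle non-rectangular rooms, sensor noise, and doors and windows, which this sketch leaves out.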

2. How will Apple create a "metaverse"? Start by building an avatar.

Apple has previously released Memoji, which mirrors the user’s facial expressions in real time. Originally, Memoji only followed the user’s head movements, but this range can now be extended to the whole body. The patent is currently aimed mainly at head-mounted displays (HMDs), but it may be applied to other AR products in the future.

This technology collects your posture information through multiple cameras and lets you customize a virtual avatar, which then changes according to your movements. For example, if you talk and gesture, your avatar will talk and gesture too. This will enrich the creative possibilities of video, and it may find even wider use when linked with games.

▲ A similar example: Doug Roble, director of software research and development at Digital Domain, and his avatar.

3. Spatial haptics/audio: Apple "pats" your head to make you turn around.

To enhance spatial haptic perception, the AR glasses will use haptic guidance to shift the user’s attention to a virtual conference call or elsewhere. For example, a user wearing the AR glasses can feel a vibration pattern moving to the left, which prompts them to turn left.

▲ FaceTime has added features related to spatial audio.

Like spatial haptic guidance, spatial audio mainly adjusts the sound according to changes in the user’s head position. This technology will track the user’s head movements through image sensors, and will also provide filters and visual guidance, so that users can have a better music experience.
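
The core idea, that the sound follows the head, can be sketched in a few lines of Python. This is a deliberately simplified illustration and not Apple’s implementation: real spatial audio relies on far richer filtering, while this sketch only computes a constant-power stereo pan. The function names, and the convention that positive angles point to the user’s right, are assumptions made for the example.

```python
import math
from typing import Tuple


def relative_azimuth(source_deg: float, head_yaw_deg: float) -> float:
    """Direction of the sound relative to where the head faces, in [-180, 180)."""
    return (source_deg - head_yaw_deg + 180.0) % 360.0 - 180.0


def stereo_gains(source_deg: float, head_yaw_deg: float) -> Tuple[float, float]:
    """Constant-power pan: return (left_gain, right_gain) for the current head yaw."""
    azimuth = relative_azimuth(source_deg, head_yaw_deg)
    # Simplification: sources behind the listener are clamped to the nearest side.
    azimuth = max(-90.0, min(90.0, azimuth))
    pan = (azimuth + 90.0) / 180.0  # 0.0 = hard left, 1.0 = hard right
    angle = pan * math.pi / 2.0
    return math.cos(angle), math.sin(angle)


# A voice fixed straight ahead (0 degrees) in the room.
print(stereo_gains(0.0, 0.0))   # head facing the voice: equal gain in both ears
print(stereo_gains(0.0, 90.0))  # head turned right: the voice moves to the left ear
```

Because the gains are recomputed from the head yaw, the virtual source appears to stay fixed in the room while the user turns.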

At present, only AirPods Pro, AirPods Max and Xiaomi’s True Wireless Noise Cancelling Earphones 3 Pro support spatial audio applications, and few other products offer spatial audio features.

4. AR recognizes people and cars: built specially for Didi? Never worry about getting into the wrong car again.

During rush hour, many ride-hailing cars are often waiting for their customers at the roadside, and with so many vehicles around, customers can easily get into the wrong one. In response, a new Apple patent describes an AR system: when you wear AR glasses, you may be able to pick out your own ride from many vehicles at a glance.

The system will work with ride-hailing apps to provide two-way AR identification, so that drivers can spot their customers in the crowd and customers can spot their ride in a sea of cars. Apple invested one billion dollars in Didi Chuxing in 2016 and, according to public information, has not invested in any other ride-hailing app, so this AR system may have been built specially for Didi.

▲ Block diagram of the augmented reality system for proximity sensing.

The system captures images through the camera of the mobile device, marks the matching vehicles and passengers with indicators or identifiers, and displays them on the device. With these simple on-screen markers, you can avoid the embarrassment of getting into the wrong car during rush hour.

What kind of experience will Apple’s AR glasses bring, and can they really help consumers in their daily lives? As the next generation of smart wearables, AR glasses play an important role in presenting digital information to consumers, which can speed up the merging of the digital and physical worlds. However, the AR glasses currently on the market still fall short in data transmission and information display.

According to the patent documents, Apple is tackling these problems with point cloud and virtual information display technologies.

1. Solving the data problem: speeding up data transfer with point cloud compression.

A point cloud is a set of data points in space, usually captured by a 3D scanner to describe three-dimensional objects. The LiDAR scanner on Apple’s iPhone 12 Pro series can support the collection and transmission of point cloud data.

The new patent document indicates that point clouds captured by the LiDAR scanner can be compressed ("point cloud compression"), making it possible to deliver richer information about the real environment.

This technology can be used in VR, AR, geographic information systems for maps, sports replay broadcasts, museum exhibits, autonomous navigation for Project Titan and many other projects.
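
Why compression speeds things up is easiest to see with a toy example. The Python sketch below uses voxel-grid quantization, a generic lossy technique chosen here for illustration. It is not the scheme described in Apple’s patent, and the function names and the 10 cm voxel size are assumptions. Points are snapped to an integer grid and duplicates are merged, so fewer and smaller values need to be transmitted.

```python
import math
from typing import Iterable, List, Tuple

Point3D = Tuple[float, float, float]
Cell = Tuple[int, int, int]


def quantize(points: Iterable[Point3D], voxel_size: float) -> List[Cell]:
    """Snap points to an integer voxel grid and merge points sharing a voxel."""
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    cells = {
        (
            math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size),
        )
        for x, y, z in points
    }
    return sorted(cells)


def dequantize(cells: Iterable[Cell], voxel_size: float) -> List[Point3D]:
    """Rebuild approximate points at voxel centres (error <= voxel_size / 2 per axis)."""
    return [
        ((i + 0.5) * voxel_size, (j + 0.5) * voxel_size, (k + 0.5) * voxel_size)
        for i, j, k in cells
    ]


# Hypothetical scan: two points that fall in the same 10 cm voxel, plus one far away.
scan = [(0.01, 0.02, 0.03), (0.04, 0.01, 0.02), (1.26, 0.0, 0.0)]
cells = quantize(scan, voxel_size=0.1)
print(len(scan), "points ->", len(cells), "voxels:", cells)
```

The trade-off is controlled by the voxel size: larger voxels mean less data but a coarser reconstruction.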

2. Good news for people with no sense of direction: displaying virtual guidance information in the real environment.

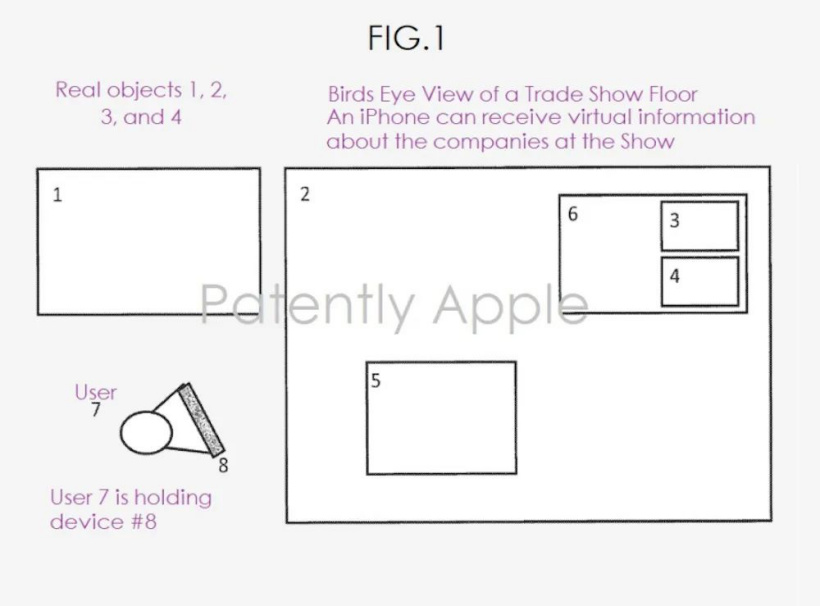

In September this year, Apple obtained a patent related to displaying virtual information in a real environment, which allows users to locate and navigate to their destinations through mobile devices.

At present, information services in the AR field usually rely on a combination of different technologies and cannot update information promptly as the scene changes. In addition, some conventional GPS-based display technologies often cannot provide accurate location information.

The new system displays at least one item of virtual information based on real scene data, and displays distant virtual information at lower accuracy.

▲ Patent schematic for displaying virtual guidance information in a real environment.

Specifically, as the user approaches the target area, information about unrelated areas gradually fades out or is shown only roughly, while the digital information in the target area becomes more detailed. What is more, the closer the user gets to the target area, the more accurate the relevant data becomes.
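
This behaviour resembles what graphics programmers call distance-based level of detail. The Python sketch below illustrates the principle only and is not taken from the patent: the 50 m and 500 m thresholds, the 0.75 cut-off and the function names `detail_level` and `label_for` are hypothetical values chosen for the example.

```python
def detail_level(distance_m: float, near_m: float = 50.0, far_m: float = 500.0) -> float:
    """Return 1.0 (full detail) at or inside near_m, 0.0 at or beyond far_m."""
    if near_m >= far_m:
        raise ValueError("near_m must be smaller than far_m")
    if distance_m <= near_m:
        return 1.0
    if distance_m >= far_m:
        return 0.0
    # Linear fade between the two thresholds.
    return (far_m - distance_m) / (far_m - near_m)


def label_for(name: str, distance_m: float, details: str) -> str:
    """Choose how much text to overlay for a place, based on its distance."""
    level = detail_level(distance_m)
    if level >= 0.75:
        return f"{name}: {details} ({distance_m:.0f} m)"  # close: full, precise label
    if level > 0.0:
        return f"{name} (~{round(distance_m, -1):.0f} m)"  # mid-range: rough distance
    return name  # far away: name only


for distance in (20.0, 274.0, 900.0):
    print(label_for("Cafe", distance, "open until 22:00"))
```

The same scaling value could equally drive opacity or icon size, so that irrelevant areas fade out smoothly rather than disappearing abruptly.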

Creating a product that can set off a new consumer craze takes not only technical support but also innovative, creative talent as a strong backing.

As one of the earliest companies to invest in AR, Apple has an AR research team of nearly 1,000 people. According to Bloomberg News, the executive in charge of Apple’s AR business is Mike Rockwell, who reports to Dan Riccio, Apple’s senior vice president of hardware engineering.

Mike Rockwell, a former executive vice president of Dolby and a senior researcher at Dolby Labs, is now one of the "candidates" for Apple’s next CEO.

▲ Mike Rockwell (Source: Patently Apple)

The team also includes new hires with backgrounds in video games, audio guidance, computer vision and special effects, as well as Apple veterans with rich experience in supply chain and program management. Examples include Fletcher Rothkopf, who was in charge of watch design at Apple, and Tomlinson Holman, who created the THX sound standard.

In recent years, Apple has recruited talent from around the world to expand its AR R&D team. It previously poached Li Zeyu from Magic Leap to serve as a senior computer vision algorithm engineer.

In addition, Cody White, former chief engineer of Amazon’s Lumberyard virtual reality platform, and Avi Bar-Zeev, who worked on HoloLens and Google Earth, joined Apple’s VR/AR research team one after the other. Interestingly, both experts have since left, for reasons that have not been disclosed.

In addition to its talent pool, Apple has also strengthened its technology base by acquiring a number of companies.

After acquiring the Israeli hardware company PrimeSense in 2013, Apple went on to acquire more than ten companies related to AR, covering sensors, AR software, the AR content ecosystem and even AR lenses.

▲ Apple’s acquisitions of AR glasses-related companies over the years.

To sum up, Apple has built up solid reserves of talent and technology for its AR project. Everything is now ready except the formal product launch and the test of the market.

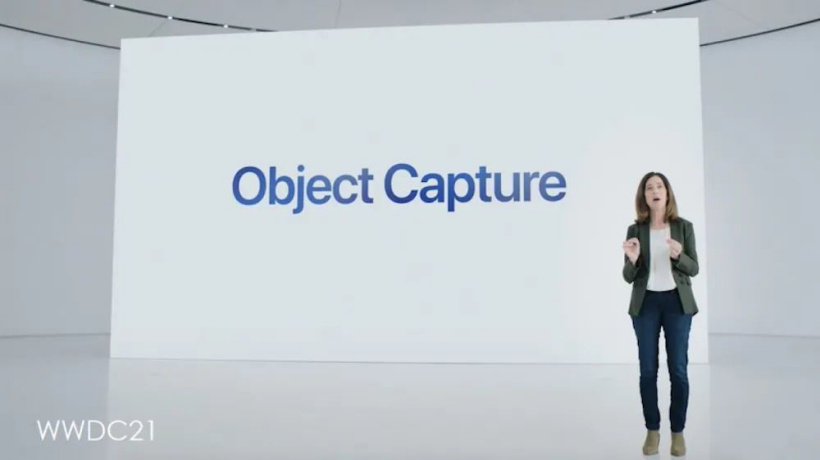

Although Apple’s AR glasses have not yet officially appeared, Apple has already made many moves in the AR field: at WWDC 2021 it not only introduced the ARKit 5 platform but also launched AR-related features such as Object Capture and RealityKit 2.

Apple said that 7,000 developers have so far built 10,000 iOS apps that support AR. During the pandemic, people have been able to shop or plan home decoration through such AR apps.

It is not only professional developers who can build products on the ARKit platform; amateur AR enthusiasts can also make their own AR software with it.

In addition, Apple TV+, which Apple showcased at this autumn’s event, may also become an important source of content for its future ecosystem.

Although Apple has not launched a dedicated AR app store, its AR content ecosystem and platform plans are gradually taking shape, and this will become one of the standout advantages of Apple’s AR glasses.

With a strong technology base, an excellent R&D team and a rich content ecosystem, Apple’s AR glasses seem to hold many advantages even before release. For a consumer product, however, performance is the standard by which everything is tested, and it is also the product’s most important lever.

In terms of chips, according to The Information, the chip design of Apple AR glasses has been completed, and now it has entered the trial production stage.

According to the report, the chip uses TSMC’s 5nm process and includes a CPU and GPU, but lacks Apple’s Neural Engine. It is reported that the chip may connect wirelessly to a host device, which would handle the heavy computing.

Moreover, according to industry reports, the CMOS image sensor paired with this SoC is unusually large, close in size to one of the headset’s lenses. This may be intended to capture high-resolution image data from the user’s surroundings more quickly and to provide better scene rendering or other AR services.

In addition, Apple’s AR glasses may be more lightweight and use a split design, and Bloomberg has also reportedly said that Apple will produce a special Steve Jobs edition. Well-known designers in the industry have also put their imaginations to work to "help" Apple design new AR glasses.

Korean designer Martin Hajek designed the concept map of the new AR glasses with reference to the dial of Apple Watch and the color matching of iPhone.

▲ The appearance of Apple AR glasses designed by Korean designer Martin Hajek

Judging from the design released by Martin Hajek, a 3D artist based in the Netherlands, Apple’s AR glasses may look similar to ordinary glasses. He used metal for the frame and placed a wireless antenna and sensors inside, with the WiFi and Bluetooth components on both sides of the frame.

▲ The appearance of Apple AR glasses designed by Dutch artist Martin Hajek

On the display side, analyst Ming-Chi Kuo said that Apple’s AR glasses may use OLED screens. Since Apple has previously acquired a company working on AR lenses, it is very likely that Apple will develop its own lenses for the AR glasses.

As for final assembly, Goertek is currently the world’s leading contract manufacturer of high-end AR/VR equipment and has built devices for Sony and Facebook. According to industry forecasts, Goertek’s AR/VR equipment assembly will account for 80% of the global market in 2021. Since Goertek has also manufactured AirPods and other related products for Apple, its long-standing relationship with Apple means it may take on the manufacturing of Apple’s AR glasses as well.

We can see that Apple has invested heavily in technology, talent and funding for its AR glasses, and has also tried to bring some new approaches to display technology.

The concept of AR glasses has been around for a long time, but no mature product has appeared. For example, although Microsoft and Google have released smart glasses, they have not been widely accepted by users. Stefano Corazza, head of AR at Adobe, once said that "Head-mounted displays, AR and other technical products are in our roadmap, but none of them have reached the critical quality that we can deploy."

The release of Apple’s AR glasses has become a major event watched by the whole industry, and at every Apple event we see close attention paid to them. According to supply chain reports, Apple will launch these AR glasses around 2023. Whether the upcoming product will draw other software, hardware and content players into the AR market remains to be seen, and we will continue to follow the latest developments.